January 18, 2018 | Alumni

What's it like to develop Google’s latest AR technology? Ask this U of T alumnus

By Nina Haikara

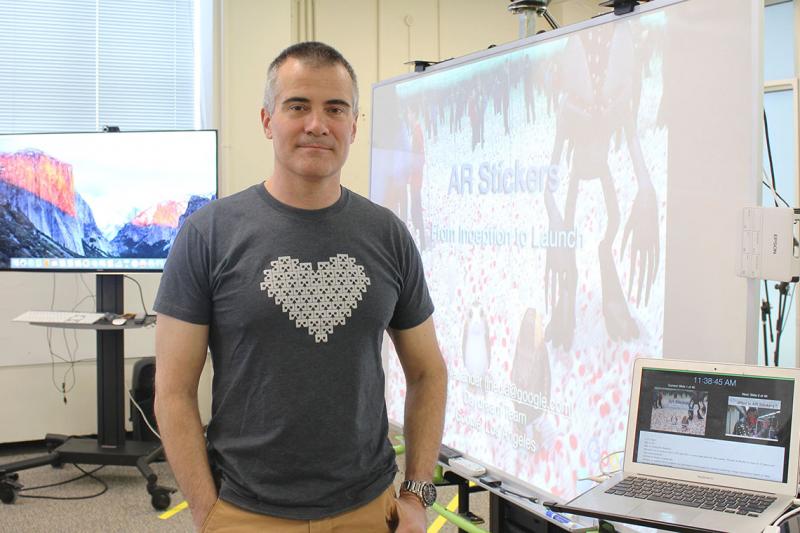

U of T alumnus Ivan Neulander gives students a technical overview of Google’s augmented reality (AR) stickers at the Department of Computer Science Innovation Lab (photo by Ryan Perez)

After completing his undergraduate and master’s degrees in computer graphics at the University of Toronto, Ivan Neulander (BSc 1995 St. Mike's, MSc 1997) left his Toronto home to take a job with a visual effects company based in Los Angeles.

“Back in the late 90s, California was really the place to be for doing visual effects,” says the Department of Computer Science graduate. “It’s become a lot more globalized now. Rhythm and Hues was the first one to make me a job offer, so the rest is history, as they say.”

The industry has seen swift change in the 20 years since. After 15 years with the company, and before Rhythm and Hues permanently closed its doors, Neulander took his computer graphics skills to a software engineering post at Google, where he started on the photos team and worked on painterly renderings.

“When I first started, VR [virtual reality] was still in its infancy. The technology wasn’t yet there to make a truly compelling experience,” says Neulander, who works out of the famous Binoculars Building, an office space designed by Canadian-born architect Frank Gehry in the Venice neighbourhood of Los Angeles.

“I was interested primarily in computer graphics, and more specifically, photorealistic rendering. And so it lent itself really well to visual effects, to movies. And that's the industry that I pursued.

“It wasn't until 15 or so years later, when I came to Google, that the Daydream team was gathering steam and we had the tech to render things smoothly in a way that doesn't give people nausea. We could actually start using mobile devices to give everybody a pretty good VR experience.”

Neulander describes rendering as image synthesis – the creation of an image on a computer.

“The beauty of computer graphics is…the image. I like the fact that I can kind of validate what I've done just by looking at the image.”

In more detail, 3D rendering requires a virtual camera, virtual light sources and 3D geometry (typically triangle meshes, with textures applied to their surfaces), followed by shading techniques that make those surfaces look realistic, whether they represent fibres, wood or even clouds, he says.
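To make those ingredients a little more concrete, here is a minimal sketch of one of the simplest shading models, Lambertian (diffuse) shading, applied to a single triangle lit by one directional light. This is a textbook illustration chosen for this article, not the technique Neulander or Google used; the helper names (`triangle_normal`, `lambert`) are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def triangle_normal(v0: Vec3, v1: Vec3, v2: Vec3) -> Vec3:
    """Unit normal of one mesh triangle (counter-clockwise vertex order)."""
    return normalize(cross(sub(v1, v0), sub(v2, v0)))


def lambert(normal: Vec3, to_light: Vec3, albedo: Vec3, intensity: float = 1.0) -> Vec3:
    """Diffuse colour: surface colour scaled by the cosine of the angle to the light.

    `to_light` points from the surface toward a directional light (a hypothetical
    single-light setup); surfaces facing away from the light are clamped to black.
    """
    cos_theta = max(0.0, dot(normal, normalize(to_light)))
    return tuple(c * intensity * cos_theta for c in albedo)


# A triangle lying flat in the xy-plane, lit from directly overhead.
n = triangle_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))
print(lambert(n, (0, 0, 1), (0.8, 0.5, 0.2)))  # prints (0.8, 0.5, 0.2)
```

Tilting the light away from the surface dims the colour by the cosine of the angle between them. Production renderers layer textures, shadows and far more sophisticated material models on top of a simple term like this one.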

“It can be something that happens 60 times per second on a PlayStation 4, which gives you actually a pretty decent level of quality these days. But when you're talking about visual effects, there's a much higher bar for the quality that's expected. The shadows have to look really good. The lighting has to look realistic. Oftentimes you're inserting computer-generated content into live action footage – people interacting with CG [computer graphics] characters. And so the lighting really has to match between those two.”

Lighting a scene, with “blobby” shadows or with shadow maps, was one of the problems Neulander tackled while developing Google’s AR stickers, a camera mode currently available on Google’s Pixel phones that lets users place CG characters, including some from Star Wars and Stranger Things as well as Google’s own, into the phone’s live view. It’s the newest app to be developed using Google’s ARCore technology.

Disney’s Star Wars “porg” visits the Department of Computer Science Innovation Lab via the Google AR stickers app (photo by Ryan Perez)

“I feel this is kind of a new development with mobile phones,” he says. “AR stickers are meant to be fun and kind of lighthearted. It's a neat way to get the [AR] ball rolling.”

Neulander predicts that future uses could include helping you navigate a store or leaving notes at places you want to revisit.

Could we one day augment our view by looking at buildings through our phones and gather their history?

“Being able to quickly identify buildings based on what the phone sees is exactly the kind of problem that Google excels at. It's something that lends itself well to a deep learning solution, and there's so much training data that Google has to feed that learning algorithm.

“Stay tuned, but I think something like that will be coming very soon.”