September 7, 2018 | Research

A recipe to save lives: Geoffrey Hinton and David Naylor call on physicians to embrace AI

By Chris Sorensen

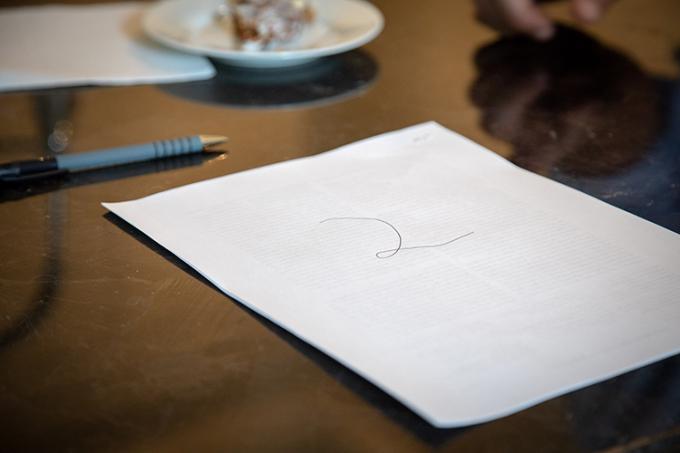

University Professor Emeritus Geoffrey Hinton and U of T President Emeritus Dr. David Naylor discuss the transformative impact of AI on health care in the kitchen of Naylor’s Toronto home (photo by Lisa Lightbourn)

Deep learning “godfather” Geoffrey Hinton, who knows all too well the limitations of our health-care system, has joined forces with University of Toronto President Emeritus Dr. David Naylor (MD 1978) to communicate the life-saving potential of artificial intelligence to physicians – an effort literally hashed out over the kitchen table.

The two academic luminaries recently published companion “Viewpoint” pieces in the prestigious Journal of the American Medical Association (JAMA) that seek to demystify deep learning, a branch of AI loosely modelled on how the human brain learns, and lay out the factors that will drive its adoption in medicine.

The one-two punch – Hinton on the technology, Naylor on factors driving its adoption in health care – was conceived by the two men after nearly a dozen meetings and untold cups of coffee in, of all places, the airy kitchen of Naylor’s midtown Toronto home.

“My hope is that a large number of doctors and medical researchers, who have no idea what this stuff is about – they don’t know what it is other than some weird form of statistics – will have an intuitive understanding of how it works,” says Hinton, a University Professor Emeritus in U of T’s computer science department who also works for Google.

“I want them to get an idea of what’s really going on inside these systems.”

Though there are many potential applications for deep learning, from self-driving cars to robotic assistants, Hinton is particularly passionate about applying the technology to health care. That’s because he has personally witnessed how his lab work could save patients’ lives. He lost his first wife to ovarian cancer and is now facing the tragic prospect of losing his second wife to another form of the disease.

“I have seen first-hand many of the inadequacies of the current technology,” he says.

How could deep learning help? Hinton offers, as an example, the task of tracking the growth of an irregularly shaped cancerous tumour over a period of several months when more than one radiologist is involved. One might record a measurement of 1.9 cm while another might not measure the tumour the same way and come up with a slightly different number.

“Over time, it makes for a very inconsistent longitudinal set of measurements, which makes it very hard to notice things that are going on,” Hinton says. “If you have a computer system doing this, you will at least get something that’s consistent.”

Hinton says JAMA first approached him last summer about writing an article. While he immediately expressed interest – “I’ve been going around for a long time saying health care is an area where this stuff is going to have a big impact” – he says he quickly realized that he needed assistance.

So he reached out to Naylor, a colleague and neighbour. In addition to being U of T’s former president, Naylor is a physician, a former dean of U of T’s Faculty of Medicine and an expert on health-care policy. He also headed the blue-ribbon panel that examined how fundamental science is funded in Canada and, just this week, was named interim CEO of the Hospital for Sick Children.

The two spent hours standing over Naylor’s kitchen island (Hinton can’t sit down because of a longstanding back injury) hammering out the best way to telegraph their gentle call to action in the pages of the world’s most widely circulated medical journal.

Beyond digital imaging, Naylor identified six other factors in his JAMA article that will spur the adoption of AI in health care. They include the digitization of health records, the ability of deep learning to deal with “heterogeneous” data sets from different sources and the possibility of using the technology to discover novel molecules that can be used to develop new pharmaceuticals or other therapeutics.

There’s also an opportunity to “streamline” physicians’ routine work – everything from collecting data from blood samples to screening images – so they can spend more time with patients and their families.

There are, however, some significant barriers to overcome before deep learning becomes a common health-care tool.

Naylor, for one, predicts “all the usual forms of resistance,” ranging from concerns about lost jobs to who gets sued if an algorithm makes a mistake.

“There’s an enormous amount of inertia in the system that could slow down adoption,” he says. “One of the forces is about evidence-based medicine and the belief that we need randomized trials for everything.

“But most of these [technologies] are in the realm of diagnostic tests or predictive indices. They’re not reasonably subject to the same type of testing, although they do have to be validated and implemented carefully and assessed for their impact.”

Another frequently voiced concern is the “black box” nature of such systems. Whereas conventional software applications are programmed to follow a specific path when making a decision based on a set of inputs, neural networks are left to figure out their own way of finding the right answer. In other words, it’s not always possible to know why the computer made the decision it did – a prospect that initially sounds troubling when it comes to aiding physicians who are making potentially life-altering diagnoses.

However, Hinton stresses it’s no different when a human radiologist is involved. To illustrate his point, he borrows a pen and scrawls a “2” on a piece of paper.

“Explain what is it about this that makes it a two,” he says. “Now, if you push people, they will start to tell you what makes it a two, but I can show you [written] twos that have none of the characteristics that you say makes this a two.”

The take-away, he continues, is that everybody – radiologists included – routinely makes determinations based on the information before them without knowing exactly how or why they made them.

For patients and their families, meanwhile, the most important aspect of any diagnosis will be its accuracy and timeliness – both areas where deep learning is expected to have a clear edge. In his JAMA article, Hinton refers to a 2017 study by Stanford researchers that found today’s neural networks are already able to identify cancerous skin lesions with the same accuracy as trained dermatologists.

Moreover, the neural networks have the capacity to improve their accuracy as they are fed more examples.

“You have the capacity to learn from hundreds of thousands of images and correct over time as more information comes in,” Naylor says.

“That’s pretty hard to beat in terms of its capacity to improve health care.”